Metadata has evolved dramatically in recent years, from straightforward descriptions to supporting complex usage in today’s asset management systems. Now AI promises a great leap forwards.

If you wished to select one particular area of endeavour capable of providing a marker for the overall evolution of broadcast technology, then you could do far worse than choose metadata.

Underlining the dramatic expansion in the number and variety of platforms that broadcasters are typically obliged to support, the story of metadata is one of escalating complexity as organisations strive to manage ever-increasing quantities of content.

No one now underestimates the importance of maintaining a scrupulous approach to metadata if broadcast operations are to remain smooth and seamless.

As Avid director broadcast and media solutions marketing Ray Thompson remarks: “It’s all about the metadata when it comes to properly capturing, ingesting or importing content into an asset management system.”

Primestream chief operating officer David Schleifer charts the recent trajectory of expectation, observing that “the need for metadata has evolved as broadcasters have gone from a manual process of entering keywords and markers based on what a person logs, to live data feeds that carry pre-set metadata.

“This metadata can be accessed by users in an easy-to-use interface with lists and buttons that can be clicked on, instead of manual search and entry.

“The addition of data feeds introduces metadata schemes that can help standardise media libraries, while speeding up the time it takes to leverage metadata, as well as eliminating typos often found in manual metadata entries which affect your ability to find media further down the line.”
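
To make Schleifer's point concrete, here is a minimal sketch of how a feed-supplied vocabulary can stop typos at the point of entry. It is illustrative only: `FEED_VOCABULARY`, `Marker` and `tag_marker` are hypothetical names, and nothing here reflects how Primestream or any other vendor actually implements tagging.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabulary, standing in for values a live
# data feed might supply. Field names and values are invented.
FEED_VOCABULARY = {
    "team": {"Arsenal", "Chelsea", "Liverpool"},
    "event": {"goal", "foul", "substitution"},
}


@dataclass
class Marker:
    """A logged point in a clip, identified by its timecode."""
    timecode: str
    tags: dict = field(default_factory=dict)


def tag_marker(marker, field_name, value):
    """Attach a tag only if the value exists in the feed vocabulary."""
    allowed = FEED_VOCABULARY.get(field_name)
    if allowed is None:
        raise KeyError(f"unknown metadata field: {field_name}")
    if value not in allowed:
        # A free-text typo such as "gaol" is rejected here instead of
        # silently entering the library and breaking later searches.
        raise ValueError(f"{value!r} is not a valid {field_name}")
    marker.tags[field_name] = value
    return marker
```

In a logging interface the allowed values would be offered as lists or buttons, so the operator picks a term from the scheme and never types it by hand.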

With automation continuing to bring greater benefits to metadata applications, it’s no surprise to discover that artificial intelligence (AI) is – to a greater or lesser degree – expected to herald the next great leap forward.

Hence Schleifer notes: “With the need for faster and more efficient turnaround, we’re finding broadcasters want to leverage AI to add metadata to video and to allow production teams to effectively focus on storytelling and the creative processes more than ever.”

The primary challenge for vendors providing metadata management technology, therefore, is to support the way customers wish to work today – and also to look ahead to accommodating the probable AI-informed workflows of the near future.

Manage, automate, monetise

Dalet director of product strategy Kevin Savina confirms that the market continues to move towards “greater density [of metadata] and also depth in the way it is being used.” Overwhelmingly, broadcasters are working to “distribute their content to a much wider audience,” and to many different platforms – and as such, one of the key themes for Dalet “is to help our customers in that transition and adaption, and to leverage the opportunities that come with this.”

Many of the company’s latest developments revolve around the Dalet Galaxy Media Asset Management (MAM) platform. The latest evolution, Dalet Galaxy five, offers component-based IMF workflows, extensive third-party integration and native support, paving the way for easier fulfilment of national and international distribution requirements.

In addition to ongoing activity around “timecoded metadata and the preservation through the editing process of the genealogy of metadata”, Dalet also continues to focus on the expectations of “tools used to manage workflows and automate them”.

And then, of course, there is the not-so-small matter of AI, with Savina forecasting that, industry-wide, “the addition of AI-generated metadata will be one of the major trends through 2019 and into 2020.”

Schleifer highlights the ability of Primestream Logging applications to offer metadata-tagging tools for live or pre-recorded video: “The tool’s tightly integrated and configurable user interface enables content loggers to tag video easily with defined metadata as it comes into the Primestream Media Asset Management environment – making assets easier to manage, automate and ultimately monetise.

“The solution is also tightly integrated with data feeds like STATS and the Associated Press so we can improve the speed and accuracy of gathering metadata, while Primestream also offers APIs to integrate AI solutions to further improve the speed, types and quality of metadata tagging in production workflows.”

Avid is another vendor much concerned with the need to facilitate the flexible automation of metadata. Hence MediaCentral | Cloud UX, remarks Thompson, “supports a growing number of third-party on-premise solutions which can enhance a media library through automated metadata tagging.

“One of Avid’s unique offerings within MediaCentral | Cloud UX is phonetic search. Based on phonemes, users can type in multiple words or phrases, even if they are not spelled properly, and MediaCentral | Cloud UX does a dynamic search based on the entered criteria. It will return the media with marks identifying exactly where the word or phrase is used in that clip.”
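
The article does not describe how Avid's phonetic search works internally. As a rough illustration of the general idea of matching on sound rather than spelling, the sketch below applies the classic Soundex algorithm to the words of a timecoded transcript. This is a much simpler, text-based stand-in for phoneme-based audio search, and the `find_mentions` helper and the sample transcript are invented for the example.

```python
# Standard Soundex consonant groups; vowels, y, h and w carry no code.
CODES = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        CODES[ch] = digit


def soundex(word):
    """Return the four-character Soundex key for a word."""
    letters = [c for c in word.lower() if c.isalpha()]
    if not letters:
        return ""
    encoded = letters[0].upper()
    prev = CODES.get(letters[0], "")
    for ch in letters[1:]:
        code = CODES.get(ch, "")
        if code and code != prev:
            encoded += code
        # h and w do not separate letters that share a code; vowels do.
        if ch not in "hw":
            prev = code
    return (encoded + "000")[:4]


def find_mentions(query, transcript):
    """Return timecodes of transcript words that sound like the query."""
    target = soundex(query)
    return [tc for tc, word in transcript if soundex(word) == target]


# Hypothetical transcript as (timecode, word) pairs.
transcript = [
    ("00:00:04", "Schleifer"),
    ("00:00:09", "metadata"),
    ("00:00:15", "Thompson"),
]
hits = find_mentions("Shleifer", transcript)  # misspelled query
```

Here the misspelled query still locates the word at `00:00:04`, because both spellings reduce to the same key. A production system would work from the audio itself rather than from a transcript.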

Other developments include the creation of a number of apps for deployment with MediaCentral | Cloud UX that can be used to monitor social media feeds. There is also support for Microsoft Cognitive Services, “allowing users to leverage speech to text, as well as scene and facial recognition, to auto-index their library.”

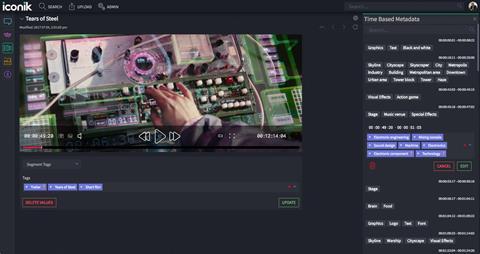

For Cantemo, many of its activities in this area centre upon the iconik hybrid-cloud media management platform and the Cantemo Portal media asset management solution. For example, as of version 3.4 of Cantemo Portal, users are able to display one metadata field on several subgroups – a feature destined to be especially pertinent to those responsible for managing separate content with the same access rights.

Looking ahead, Cantemo CEO Parham Azimi says that “we are focusing a lot on AI and being able to integrate with different solutions. We are also looking to provide a form of basic metadata suggestion tool.”

But whatever the future brings, the emphasis will continue to be on “providing tools that give our customers the freedom to create any kind of metadata set… We don’t want to dictate what their metadata should look like.”

Deepening demands

No matter what the eventual impact of AI on metadata creation – and, as one would expect, opinions regarding the nature and timescale of rollout do vary – no one would dispute Thompson’s assertion that “the amount of data is only going to increase. [For example] IoT devices can now connect and deliver data to enhance incoming media over IP from mobile devices, wearables, cameras, satellite, fibre and more.”

So for media companies across the board, “having the tools in place to accept all incoming feeds and the metadata that comes with it from multiple sources is critical. It affects their ability to react quickly to ever-changing situations in news, sports and entertainment.

“Social media will continue to play a critical role going forward as user-generated content becomes increasingly important to tell a story as it unfolds.”

Indeed, as 5G mobile services start to achieve traction, and the amount and variety of content that can be “passed back and forth” evolves, metadata will also play a determining role in how consumers interact with media. “And when combined with social platforms and technologies like VR,” predicts Thompson, “entertainment will be taken to a new, more personal level.”
