ABSTRACT

In conventional HTTP adaptive streaming (HAS), video content is encoded into multiple representations, and each representation is temporally segmented into small pieces.

A streaming client selects segments among the available representations mostly according to the measured network bandwidth and buffer fullness. This greatly simplifies the design of the adaptation algorithm; however, it does not optimize the viewer's quality of experience.

Through experiments, we show that quality fluctuation is a common problem in HAS systems. Frequent and substantial fluctuations in quality are undesired and cause dissatisfaction, leading to revenue loss in both managed and unmanaged video services.

In this paper, we argue that the impact of such quality fluctuations can be reduced if the natural variability of video content is taken into consideration.

First, we examine the problem in detail and lay out a number of requirements to make such a system work in practice. Then, we study the design of a client rate adaptation algorithm that yields consistent video quality even under varying network conditions.

We show several results from experiments with a prototype solution.

Our approach is an important step towards quality-aware HAS systems. A production-grade solution, however, needs better quality models for adaptive streaming. From this viewpoint, our study also brings up important questions for the community.

INTRODUCTION

In an HAS system, a single master high-quality video source is transcoded into several streams, each with a different bitrate and/or resolution. These streams are called representations, and each of the representations is temporally chopped into short chunks of a few seconds, where each chunk is generally independently decodable. These content chunks are called segments.

MPEG's Dynamic Adaptive Streaming over HTTP (DASH) standard [1] supports both physical and virtual segmentation of the representations.

The generated segments, together with the manifest file that provides detailed information about the content features, representations and segments, are posted on an HTTP server. The streaming clients then use HTTP GET requests to fetch the manifest file and the segments from the server.

Segmentation is essential to support live (linear) content delivery, as HTTP is an object delivery protocol and the use of segmentation allows us to generate the segments (i.e., objects) in real time.

If the content is not segmented, it cannot be delivered linearly. In this case, the content can only be delivered after it is finalized, and one can use the traditional progressive download method to download the entire content with only one HTTP GET.

When segmentation is done for multiple representations, the streaming client also gets the capability of adapting. At segment boundaries, the client can decide to stick with the same representation, fetch a segment from a higher quality representation, or fetch a segment from a lower quality representation.

The decision depends on a number of factors including, but not limited to, server/network performance, client conditions and the amount of data available in the client's buffer. Typically, tens of seconds of content is buffered at the client to accommodate the unpredictability of the network conditions.
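The conventional decision described above can be sketched as a simple throughput- and buffer-driven rule. This is only an illustrative sketch of that baseline, not the algorithm studied in this paper. The names `Representation` and `select_representation`, the 10-second low-buffer threshold, and the 0.8 safety factor are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Representation:
    """One encoded version of the content (hypothetical model)."""
    name: str
    bitrate_bps: int  # nominal encoding bitrate in bits per second


def select_representation(representations, throughput_bps, buffer_s,
                          low_buffer_s=10.0, safety=0.8):
    """Pick the representation to fetch the next segment from.

    Assumed rule: if the buffer is nearly empty, fall back to the lowest
    bitrate; otherwise take the highest bitrate that fits within a
    fraction (safety) of the measured throughput.
    """
    ladder = sorted(representations, key=lambda r: r.bitrate_bps)
    if buffer_s < low_buffer_s:
        # Buffer is low: protect against an underrun first.
        return ladder[0]
    budget = safety * throughput_bps
    affordable = [r for r in ladder if r.bitrate_bps <= budget]
    # If nothing fits the budget, the lowest bitrate is the only option.
    return affordable[-1] if affordable else ladder[0]


ladder = [
    Representation("low", 1_000_000),
    Representation("mid", 2_500_000),
    Representation("high", 5_000_000),
]
choice = select_representation(ladder, throughput_bps=4_000_000, buffer_s=25.0)
print(choice.name)
```

Note that this rule looks only at bitrate, throughput and buffer level. It has no notion of how complex the upcoming scene is, which is the limitation the rest of this paper addresses.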

A common practice among streaming providers has been to run the transcoders in constant bitrate (CBR) mode, resulting in more or less equal segment sizes and durations for a given representation. That is, all the equal-duration segments produced from a single representation have approximately the same size in bytes.
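The arithmetic behind this predictability can be sketched as follows. The helper names and the example numbers (a 3 Mbps representation, 2-second segments, a 6 Mbps link) are assumptions for illustration, and an ideal CBR encoder with no container overhead is assumed.

```python
def cbr_segment_size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """Approximate size of one segment under ideal CBR encoding."""
    return bitrate_bps * duration_s / 8  # bits to bytes


def download_time_s(size_bytes: float, throughput_bps: float) -> float:
    """Time to fetch a segment at a steady throughput."""
    return size_bytes * 8 / throughput_bps


size = cbr_segment_size_bytes(3_000_000, 2.0)
print(size)                              # 750000.0 bytes per segment
print(download_time_s(size, 6_000_000))  # 1.0 second per segment
```

Because every segment of a representation costs roughly the same number of bytes, the client can predict the download time of the next segment from the nominal bitrate alone.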

This simplified the rate adaptation algorithms for the client developers and helped them minimize the chance of a buffer underrun.

However, most content types are not suitable for CBR encoding since they exhibit a large variation in complexity in the temporal domain. Thus, during a session, even if the network conditions do not change, a naive client that streams CBR-encoded segments may easily experience quality variation when switching between a high-motion or high-complexity (e.g., explosion) scene and a low-motion or low-complexity (e.g., static) scene.

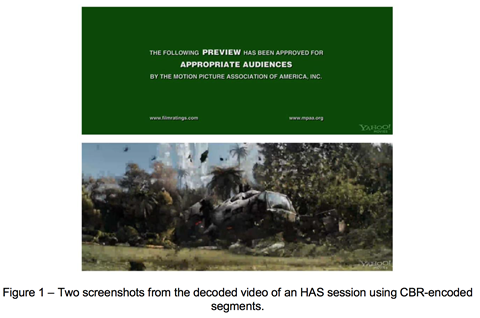

For example, Figure 1 shows two screenshots from a decoded video of an HAS session streamed over a constant-bandwidth link. The first screenshot is from the preview title, which is static and has low complexity. The second is from a fairly complex and dynamic scene. Not surprisingly, the CBR-encoded segments yield a much lower visual quality in the second screenshot than in the first.

Ali Begen, "Quality-Aware HTTP Adaptive Streaming"