As consumers demand more personalisation, metadata about individual users is emerging as a key resource for the industry, writes Adrian Pennington.

Big Data was once touted as the oil that would fuel future media, but it is bite-sized information, the metadata about individual viewers, that is arguably the more valuable resource.

Granular insights about our viewing habits are culled from the vast volumes of data that broadcasters are being encouraged to siphon if they are to have any chance of competing with the masters of digital analysis: Netflix, Amazon and Google.

“The biggest challenge the industry faces in the drive to optimise the user experience is understanding available data, predicting viewers’ motivations, and ultimately using this data to create viewer-tailored experiences,” says Peter Szabo, department lead UX/UI at solutions provider 3SS.

The appetite for personalised content and services becomes clear when you ask people. According to research carried out by Edgeware in April among more than 6,500 adults across the UK, US, Hong Kong, Mexico and Spain, the vast majority (89%) expressed interest in watching TV content aimed at their personal interests.

The research also found that 68% of consumers would be more likely to watch a traditional TV channel if programming was more tailored to their personal preferences.

“Personalisation solutions are transforming the viewing experience, allowing audiences to receive suggestions for programmes based on previous viewing habits,” says Julian Fernandez-Campon, CTO at asset management company Tedial.

Data has already taken root in advertising, where dynamic targeted ads based on user profiles, buying patterns and internet searches are augmenting broad commercial branding.

Now it’s being used to personalise the user interface.

Imagine that the service provider knows that a user wants to browse the latest sports events, likes action movies and is an Arsenal fan. Based on this, Szabo suggests, the provider could show two stripes on the UI: one displaying recent sports events, alongside another offering a range of action movies. Ideally, the background would be a spectacular goal from the last Arsenal match, and the colour scheme could match the Gunners’ home colours.

“The problem is that we don’t have this data readily available, so we need to predict this,” he says.

Consequently, using machine learning to create audience segments or personas has become one of the most crucial research topics in UX. If users can be categorised based on their behaviours, interfaces can be tailored to their needs, ultimately creating the ideal, custom journey for each viewer.

“This categorisation problem is now solvable, thanks to the research related to deep neural networks,” Szabo contends. “3SS have created a mathematical formula to describe this television experience success. Ultimately, we want the user to find the entertainment they want in a fast and straightforward way, without any blocks.”
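To make behavioural segmentation a little more concrete, here is a minimal sketch in Python. It is not 3SS’s formula or its deep-neural-network approach: it swaps in plain k-means clustering, a simpler and well-known technique, and the viewer profiles (share of watch time per genre), viewer names and number of segments are all hypothetical.

```python
import math

# Hypothetical viewing profiles: share of watch time per genre (sport, action, drama).
VIEWERS = {
    "viewer_a": (0.80, 0.15, 0.05),
    "viewer_b": (0.75, 0.20, 0.05),
    "viewer_c": (0.10, 0.70, 0.20),
    "viewer_d": (0.05, 0.80, 0.15),
    "viewer_e": (0.10, 0.10, 0.80),
    "viewer_f": (0.05, 0.15, 0.80),
}


def mean(points):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


def farthest_first(points, k):
    """Deterministic seeding: start from the first point, then repeatedly add
    the point farthest from its nearest already-chosen centroid."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return centroids


def kmeans(profiles, k, iterations=20):
    """Plain k-means; returns a mapping of viewer id -> segment index."""
    ids = list(profiles)
    centroids = farthest_first([profiles[v] for v in ids], k)
    assignment = {}
    for _ in range(iterations):
        # Assign every viewer to the nearest centroid (Euclidean distance).
        assignment = {
            vid: min(range(k), key=lambda c: math.dist(profiles[vid], centroids[c]))
            for vid in ids
        }
        # Move each centroid to the mean of its members; keep it if the segment is empty.
        updated = []
        for c in range(k):
            members = [profiles[v] for v in ids if assignment[v] == c]
            updated.append(mean(members) if members else centroids[c])
        if updated == centroids:
            break  # converged: no centroid moved
        centroids = updated
    return assignment


if __name__ == "__main__":
    for viewer, segment in sorted(kmeans(VIEWERS, k=3).items()):
        print(viewer, "-> segment", segment)
```

The segment indices are arbitrary labels; in practice a persona would be attached to each cluster, and the interface would then decide which stripes to show for that persona.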

Voice and mood

Speech interaction is being used across a number of applications thanks to the prevalence of virtual personal assistants.

“Voice can easily be translated to text but carries also a lot more information if extracted and analysed,” says Muriel Deschanel, business development director for Hypermedia at research institute b<>com. “Studies show that age, gender, emotional state can be inferred from the user voice.”

Being in a bad or good mood heavily influences what content people want to watch. What if Alexa could tell when you are sad and adapt its response accordingly?

“ML techniques can be applied to develop an understanding of viewer behaviour based on time of day or even tastes for particular content,” Deschanel says. “This can then be used to present a UI that displays content that the viewer will most likely want to watch.”

Ruwido has developed a system that detects a user’s mood while they interact with ambient voice assistants. This information is fed into a recommendation engine that proposes content to suit that mood.

“The main challenge is to understand if people are willing to accept such a system,” says Regina Bernhaupt, Ruwido’s head of scientific research. “We also need to come up with ways to visualise mood-based recommendations in the UI.”

Live and VOD content can now be stitched together and presented as a traditional TV channel, while at the same time being personalised.

“These channels could consist of content that’s been selected based on viewers’ interests, demographic or location, enabling the development of innovative campaigns and offerings to attract new users and create revenue streams,” says Johan Bolin, CPTO at Edgeware.

Such an approach might seem at odds with the mass-transmission, public service remit of some broadcasters, but the BBC is attempting to square the circle by evolving a new broadcasting system based on IP and interactivity.

“Could we move from a one-to-many, broadcast style of media to a many-to-many, Twitch- or YouTube-style of media?” asks BBC R&D.

The BBC has been working on this concept, known as object-based broadcasting (OBB), for years and is now at the stage where it needs to scale the technology without increasing cost.

It suggests that interactive content (such as the narrative branching created for the BBC technology series Click) could be delivered through a future version of a service like iPlayer.

The BBC is not alone in this endeavour. BT Sport is developing plans for OBB which will enable viewers to personalise and control some aspects or objects of programmes, such as audio or graphics.

Example applications, which could debut as early as this season in the broadcaster’s coverage of the English Premier League, include letting viewers adjust stadium and crowd noise levels and the commentary.

Automation augments sports

“Viewers will soon be able to create customised game clips, such as a highlight reel of all the goals, or pull player-specific stats and clips,” says Scott Goldman, director of product management at Verizon Media. “Broadcasters can use their knowledge of fan preferences and deliver tailored news updates, documentaries, commentary following a live broadcast, prolonging their engagement with the stream.

“Think of it like a personalised sports programme that just delivers up all the relevant content for your favourite sport or team.”

Data and statistics fed into AI-enabled engines will automate much of this process.

“If you add speech-to-text capabilities, operators can search for comments made by commentators during any live event automatically,” says Tedial’s Fernandez-Campon. “These additions enable production teams to significantly increase their output and leave them more time for creating personalised stories.”

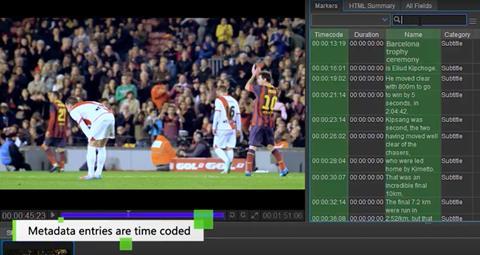

The ease with which video content can be tagged with metadata at the point of creation enables workflows centred more on the content than on the technology of live production.

“The ability to combine metadata generation with timecode information further speeds and simplifies the search process, enabling producers and the AI to locate the exact content down to the video frame,” says David Jorba, EVP and European MD at TVU Networks. “From there producers can customise content production tailored to individual audience preferences and platforms.”

For example, the Sky Sports live chat show The Football Social uses the cloud-based TVU Talkshow solution for two-way remote audience participation.

“Fans got to be part of the show in real-time using their smartphone camera, directly from the stadium as the game was being played,” says Jorba.
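Jorba’s point about pairing metadata with timecode can be illustrated with a small sketch. It assumes hypothetical AI-generated tags logged against non-drop-frame HH:MM:SS:FF timecodes at an assumed 25 fps; the tag labels and data layout are invented for illustration and do not reflect TVU Networks’ products. Converting each timecode to an absolute frame index is what lets a search land on the exact frame.

```python
from dataclasses import dataclass

FPS = 25  # assumed frame rate; drop-frame timecode (e.g. 29.97 fps) is not handled here


def timecode_to_frame(tc: str, fps: int = FPS) -> int:
    """Convert a non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame index."""
    hours, minutes, seconds, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


@dataclass(frozen=True)
class Tag:
    timecode: str  # where the tagged moment starts
    label: str     # hypothetical metadata label, e.g. produced by an AI tagger


def find_frames(tags: list[Tag], label: str) -> list[int]:
    """Return the frame index of every tag matching `label`, in time order."""
    return sorted(timecode_to_frame(t.timecode) for t in tags if t.label == label)


if __name__ == "__main__":
    log = [
        Tag("00:12:03:10", "goal"),
        Tag("00:05:40:00", "yellow_card"),
        Tag("00:47:21:24", "goal"),
    ]
    print(find_frames(log, "goal"))  # prints [18085, 71049]
```

A production system would also need to handle drop-frame rates and clips that span several source files, but the principle of indexing tags by frame is the same.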

Motorsport company Griiip has made a priority of the data collected from the cars, the tracks and airborne drones in its G1 series.

“By analysing the data coming from the vehicles and drivers using AI and deep analysis, Griiip provides all this information to viewers in an engaging, storytelling and easy-to-understand format,” says Ronen Artman, VP of marketing at LiveU, which partners Griiip in the venture.

At IBC2019, LiveU is revealing a “significant expansion” of this approach, marketing the data-based collection, editing and distribution platform to other sports as an “affordable” plug-and-play solution.

The industry is, however, still in the early stages of embracing personalisation. “Only a fraction of today’s toolset is being used,” Jorba says. “While it’s now accepted that we can search for video content at instantaneous speed, the next step will be automated content production for social media platforms where only short clips are needed.”

Tailored TV is just a data-point (or two) away

If metadata were sophisticated enough, content and advertising could be as individual as a fingerprint.

“For many consumers, recommendation tools may feel like something of a blunt instrument, missing the mark by recommending increasingly similar content or products which they have already purchased,” says Jonathan Freeman, founder of strategy consultancy i2 media and professor of psychology at Goldsmiths, University of London.

i2’s research suggests that the industry should adopt new personalisation techniques with caution to ensure users do not feel aggressively targeted.

“An idea generated from our consultation which could enable innovation while maintaining user trust is the creation and adoption of a Unified User ID,” says Freeman. “A data-bank owned and controlled by the user which supports sharing data across platforms, with options to disable if preferred.”

Emotional response

Privacy is especially pertinent as emotional inferences based on viewed content become feasible. ESPN and The New York Times have reportedly trialled emotional targeting of ads. The ability to tailor content in a way which feels intuitive to the consumer is becoming ever more likely.

“We believe that the only way to truly understand human emotions and other cognitive states, in the wild, is by analysing multiple modes of human data together,” says Gawain Morrison, CEO and co-founder of Sensum.

The Belfast-based company has researched the use of data from a variety of biosensors, including EEG headsets, smartwatches, eye-tracking heat maps and heart rate monitors (anything that can register an emotional response), to build Synsis, an ‘empathic AI’ that can be trained to understand users and respond appropriately.

Germany’s Fraunhofer provides Sensum with facial coding software that measures emotional expressions by analysing video from a camera such as a webcam. Another firm, audEERING, provides software that measures emotions from the user’s voice, processing both spoken language and the ‘paralanguage’ used between words, such as grunts and sighs. Sensum also works with Equivital, maker of a chest-worn sensor array that provides medical-grade physiological data such as heart rate (ECG), breathing rate and skin temperature. All this data is fed into Sensum’s algorithms for interpreting human states from physiological signals.

AI start-up Corto goes further. It is attempting to map every attribute of a piece of content, including values such as white balance and frame composition, to the emotional reaction a viewer has on watching it, using MRI scans to literally hack the brain.

“We will use MRI scans to measure brain activity to infer what emotional response a character or narrative has,” explains Yves Bergquist, Corto’s CEO. “That really is the ultimate: there is no greater level of granularity beyond this.”

No comments yet