The gap between OTT and broadcast is diminishing, with reductions in latency improving user experience and bolstering use cases in areas such as sports, gambling and even military video.

The business case for OTT TV is about to get much stronger, thanks to a slew of low-latency distribution solutions which promise to make broadcast equivalency the new norm while accelerating the arrival of the next generation of speed-sensitive services.

As was revealed at IBC2019, many suppliers have broken through to new levels of performance, often in conjunction with software-as-a-service (SaaS) offerings. This is being done by leveraging the processing power of commodity appliances at edge locations in combination with latency-reducing approaches to encoding, packaging and other innovations.

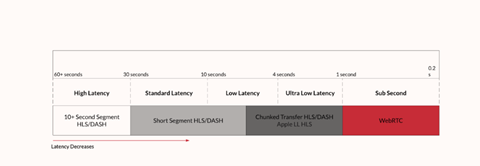

Industry vendors have been getting ever closer to enabling a true TV viewing experience for some time, with much of the progress to date resulting from use of latency-reducing mechanisms such as enhancements to UDP (User Datagram Protocol)-based transport embodied in the QUIC (Quick UDP Internet Connections) protocol and the Chunked Transfer Encoding (CTE) option used with MPEG’s CMAF (Common Media Application Format) standard.

Advances on display at IBC from suppliers including Harmonic, MediaKind, Red5 Pro and startup Eluvio as well as existing solutions from firms such as Anevia, Synamedia and Velocix are achieving end-to-end delivery at broadcast and, in some cases, sub-broadcast speeds.

Advances are also reducing bandwidth consumption while ensuring greater consistency in quality of experience (QoE). Almost all the solutions on display make it easier to support dynamic advertising and time-shift applications, including network DVR, catch-up and start-over.

The ‘Number-One Blocker’

“The number-one blocker to solving QoE in the transition to OTT is latency,” says Anevia CTO and co-founder Damien Lucas. “When Canal Plus asked customers in a recent survey what they like and don’t like about their OTT service, the number one response on the negative side was, ‘I don’t like the delay.’”

It makes sense that in the shared experiences environment of social media viewers don’t want to be hearing about things that people watching on broadcast saw 20 seconds earlier, Lucas says. “A Tweet takes about three seconds to reach people,” he notes. “If you’re watching a game on OTT, all your friends watching it on TV will be sharing what’s happening before you see it.”

Streamed sports programming is an especially sensitive cause for viewers’ concerns about latency, but the same issues apply to any significant moments in a linear TV broadcast, whether it’s the naming of an Oscar winner or the dramatic end to a crime drama. Anevia has succeeded in eliminating the problem with end-to-end latency that actually beats broadcast, Lucas says, adding that the Anevia platform tweaks the timing to be in synch with broadcast.

“It’s very important to control the whole chain, including encoder, origin, packager and CDN,” he explains. “You need integration to achieve low latency. That’s the tricky part, and it’s a game changer.”

Needless to say, the elevation of OTT TV to equivalency with traditional live programming has major implications for MVPDs (multichannel video programming distributors) as they equip their networks to compete more effectively in an IP-dominated distribution environment. The new market realities have brought them to a strategic crossroads where they can either put these new solutions to use exclusively for in-house needs or they can seize the B2B monetization opportunities that come with supporting OTT competitors’ needs for low-latency distribution and dynamic ad insertion (DAI) at edge points.

“There are a lot of business connotations happening in this space that really represent an opportunity to take companies like ours out of our comfort zones into new areas,” says Sudeep Bose, vice president of product management at Harmonic. “There’s a lot that’s going to be done over the next 12 to 18 months as people rethink their business models.”

Multiple Challenges

Most of these new distribution platforms rely on established approaches to adaptive bitrate streaming.

Exceptions include the solutions on offer from Red5 Pro, which leverages the peer-to-peer protocol WebRTC (the open-source project that provides web browsers and mobile applications with real-time communication via simple application programming interfaces) along with other mechanisms to get to sub-second end-to-end latency, and Eluvio, which eliminates the multiple processing steps from postproduction playout through various points of distribution to end users.

They all capitalize on the high-power processing and multi-application capabilities of all-purpose multi-core chipsets. But there are a lot of issues to weigh when it comes to choosing a platform that works for any given distribution scenario.

For example, the uncertainties of traffic patterns associated with live event programming pose a major challenge for entities with a heavy sports schedule, says Velocix CMO Jim Brickmeier. “Everybody says their solutions are scalable, but there’s a lot of complexity involved in putting together a cost-effective approach to achieving scalability with OTT delivery of live sports,” he says.

That’s because, to avoid spending on largely unused extra capacity in private clouds, operators need to be able to rely on public cloud resources, which is hard to do without incurring delays that are unacceptable with live sports distribution.

“We’re working with our customers to explain how they can achieve ultra-low latency with a compute architecture that pushes processing deeper at the edge while extending into the public cloud to handle the spikes that are common to live streaming,” Brickmeier says.

“You have to do this cohesively without losing QoS. As we’re showing with large customers like Vodafone and Liberty Global, flexibility at scale with the ability to maintain ultra-low latency is a big differentiator for us.”

Closer to the Edge

Market demand for scalable, cost-effective low-latency distribution solutions has prompted Harmonic to go where it’s never gone before by offering a managed OTT delivery service as an extension of its cloud-native VOS video processing portfolio. The service enables low-latency streaming of live sports content in SD, HD and UHD formats by guiding traffic through network nodes provisioned by Harmonic to serve as a cost-saving complement to existing CDN infrastructures.

“We’re partnering with ISPs to position our nodes out to the edge of networks as close as we can get to end users. It’s something that’s new to us, which we’re doing because we feel it’s critically needed by our customers,” says Harmonic’s Sudeep Bose.

Even some of the largest MVPDs are moving to the SaaS model, he says. “But it’s the newer ones and some of the Tier 2s and 3s who can’t easily move to OTT on their own that are especially drawn to this,” he says.

The company pairs the high-efficiency encoding and packaging processes of VOS with processing for unicast streaming from the edge nodes to support real-time scalability for live video services at end-to-end latency in the five-second range. Reacting to incoming data gathered from CDNs, nodes and modems on a second-by-second basis, the service controller is able to direct each stream over whatever turns out to be the fastest path at any point in time. In instances where traffic volume targeted to any given service area exceeds the capacity of the edge nodes, the delivery controller directs the traffic to whatever partner CDN facilities are best suited to sustaining low-latency delivery.
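The routing behaviour described above can be illustrated with a minimal sketch. It is not Harmonic's implementation: the `DeliveryPath` type, the `pick_path` function and all the figures are hypothetical, and the sketch simply assumes the controller has a fresh latency and load reading for every path.

```python
from dataclasses import dataclass


@dataclass
class DeliveryPath:
    """One candidate route for a stream (hypothetical model)."""
    name: str
    kind: str          # "edge" for an edge node, "cdn" for a partner CDN
    latency_ms: float  # latest measured latency on this path
    load: int          # streams currently being served
    capacity: int      # maximum streams the path can serve

    def has_room(self) -> bool:
        return self.load < self.capacity


def pick_path(paths):
    """Choose the fastest edge node with spare capacity.

    If every edge node is full, fall back to the fastest partner CDN
    that still has room.
    """
    edges = [p for p in paths if p.kind == "edge" and p.has_room()]
    candidates = edges or [p for p in paths if p.kind == "cdn" and p.has_room()]
    if not candidates:
        raise RuntimeError("no delivery path with spare capacity")
    return min(candidates, key=lambda p: p.latency_ms)


paths = [
    DeliveryPath("edge-a", "edge", latency_ms=40, load=10, capacity=10),  # full
    DeliveryPath("edge-b", "edge", latency_ms=55, load=3, capacity=10),
    DeliveryPath("cdn-x", "cdn", latency_ms=70, load=0, capacity=100),
]
chosen = pick_path(paths)
print(chosen.name)
```

In a real service the readings would be refreshed continuously, so the same call could return a different path from one second to the next.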

WebRTC and CTE explained

WebRTC (Web Real-Time Communication) is a peer-to-peer standard developed by the IETF and W3C to allow users with WebRTC-enabled browsers to access robust voice and video communications apps embedded in Web pages from any type of Internet-enabled device over any type of network without special plugins and installs. Red5 Pro, capitalizing on the real-time communication attributes and the fact that all major browsers now support the protocol, has created a platform optimized for real-time video streaming, which since its introduction over two years ago has been regularly upgraded with advancements suited to the needs of big M&E as well as smaller niche distributors.

This represents a radical departure from traditional ABR streaming where the packaging for HLS or MPEG-DASH (Dynamic Adaptive Streaming over HTTP) delivery over HTTP-based TCP (Transmission Control Protocol) connections prevents latency reduction to what can be considered real-time communications speeds. WebRTC, utilizing multiple protocols and JavaScript APIs to enable instantaneous adjustments in peer-to-peer connectivity during the streaming session, ensures the fastest paths are found for each content fragment at optimal bitrates suited to the display capabilities of each device.

CTE (Chunked Transfer Encoding)

CTE is an option used with CMAF that cuts end-to-end latency on linear content through an approach to segmenting or “chunking” fragments that eliminates the need to use an IDR frame with each chunk while enabling delivery of chunks sequentially through CDNs to clients for playback without waiting for the whole fragment to be transmitted.
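To show what chunk-by-chunk delivery looks like on the wire, here is a minimal sketch of HTTP/1.1 chunked framing, in which each chunk is sent as a hexadecimal length, a CRLF, the payload and another CRLF, with a zero-length chunk marking the end. The function names and the placeholder payloads are assumptions for illustration; real CMAF chunks are binary media data, and the decoder ignores chunk extensions and trailers.

```python
def encode_chunked(chunks):
    """Yield each payload framed as an HTTP/1.1 chunk, as soon as it is ready."""
    for chunk in chunks:
        if chunk:  # a zero-length chunk would signal end of body, so skip empties
            yield f"{len(chunk):X}\r\n".encode("ascii") + chunk + b"\r\n"
    yield b"0\r\n\r\n"  # terminating chunk


def decode_chunked(data):
    """Split a chunked body back into its payloads (no extensions or trailers)."""
    payloads = []
    pos = 0
    while True:
        eol = data.index(b"\r\n", pos)
        size = int(data[pos:eol], 16)
        if size == 0:
            return payloads
        start = eol + 2
        payloads.append(data[start:start + size])
        pos = start + size + 2  # skip the payload and its trailing CRLF


# Placeholder bytes standing in for two media chunks of one fragment.
media_chunks = [b"moof+mdat#1", b"moof+mdat#2"]
wire = b"".join(encode_chunked(media_chunks))
print(wire)
print(decode_chunked(wire))
```

Because the encoder is a generator, the first chunk can be handed to the network while later chunks are still being produced, which is where the latency saving comes from.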

The Harmonic delivery module is one of six virtualized microservices comprising the VOS platform, which also includes ingest, playout, graphics, transcoding and encryption microservices that can be implemented wherever compute resources are available.

MediaKind is pursuing a similar strategy with its distributed Media Edge modules, which operate in synch with its private or public cloud-hosted origin Video Storage and Processing Platform (VSPP). Incoming content from contribution sources is ingested and transcoded to mezzanine formats at the VSPP and streamed out to the Media Edge nodes, which perform the just-in-time formatting, streaming and encryption for unicast delivery to each viewer, says James Hart, a senior sales engineer at MediaKind.

“Our Media Edge runs next to, not on top of CDN facilities,” Hart says. In the case of non-linear content and implementations of time-shift storage with linear content, the edge-based processing capabilities make it possible to store just one copy to serve all devices in the service area. Just-in-time packaging at the edge also supports dynamic advertising with MediaKind’s DAI (Dynamic Ad Insertion) solution on linear as well as on-demand streams.

Hart says the avoidance of delays incurred through traditional approaches to capturing, encoding, packaging and transporting content to CDNs in combination with the tight integration between the VSPP and Media Edge nodes is cutting end-to-end latency to between three and seven seconds. MediaKind, like other vendors, is also cutting last-mile latency at the edge through packaging that utilizes CMAF’s CTE and efficient approaches to transcoding that eliminate ABR (adaptive bitrate) profiles that aren’t relevant to receiving devices.

Achieving Sub-Second Latency

Going even further with latency reduction, Red5 Pro has demonstrated end-to-end distribution in the range of 500 milliseconds or less. According to Red5 Pro CEO and founder Chris Allen, such speeds are mandated as things like multi-player gaming, sports gambling, auctioning and use of video in military operations enter the OTT space.

“We’re tuned into a client services model with our players using WebRTC,” Allen says. “We create peer connections to browser clients and deliver streams over UDP.”

“CMAF with CTE has reduced latency on ABR streaming to as low as a couple of seconds,” Allen notes, “but that’s not enough for services that require real-time interactivity.”

The Red5 Pro architecture employs origin servers in core locations, where content is ingested, encoded and packaged for UDP-based delivery across a hierarchical cluster of relay streamers and edge servers. By enabling each relay to restream a single stream from the origin in multiple outputs to edge servers, the system is able to scale to millions of simultaneous users without overwhelming the capacity of the origin servers, Allen explains.
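The scaling argument is simple multiplication, as the rough sketch below shows. The function and every number in it are hypothetical and are not Red5 Pro figures; the point is only that the origin's outbound stream count depends on the number of relays, not on the number of viewers.

```python
def fan_out(relays_per_origin, edges_per_relay, viewers_per_edge):
    """Return (streams the origin must send, total viewers one origin can reach)."""
    origin_streams = relays_per_origin
    total_viewers = relays_per_origin * edges_per_relay * viewers_per_edge
    return origin_streams, total_viewers


# Illustrative values only: 20 relays, 25 edge servers per relay,
# 2,000 viewers per edge server.
origin_streams, total_viewers = fan_out(20, 25, 2000)
print(origin_streams, total_viewers)
```

With these assumed values the origin sends 20 streams while the cluster reaches one million viewers; adding relays raises reach without changing the per-relay load on the origin.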

The company relies on public cloud facilities to host origins and enable automatic scaling with the addition of relays as needed. It has introduced blockchain technology into its management system to streamline the process of adding new instantiations of the platform in multiple cloud locations. “Partners can deploy nodes and earn money right away without going through heavy contractual and reporting procedures,” Allen notes.

Among new capabilities on tap for introduction in January is a “360 Player,” which will support streaming from 360-degree cameras.

“Eventually we believe this method of delivery will replace broadcast,” Allen says.

This article originally appeared on Screenplays Magazine. It has been edited for IBC365.
