MPEG-I coding performance in immersive VR/AR applications

This paper provides a technical and historical overview of the acquisition, coding and rendering technologies considered in the MPEG-I standardisation activities for immersive applications.

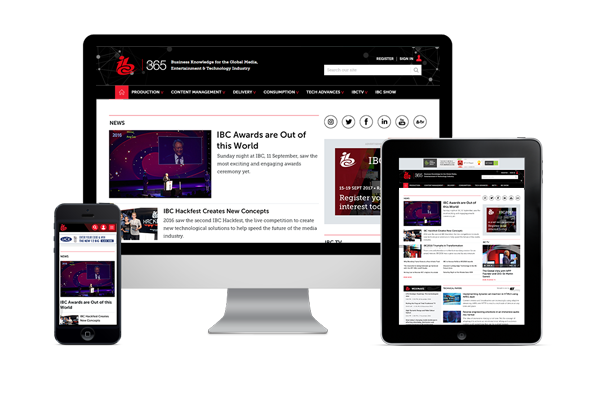
