In this second part of an IBC365 focus on becoming cloud- and microservices-native, we follow on from what vendors Grass Valley and Avid said in part one, and turn to the organisation under whose umbrella broadcasters gather to resolve the great technology issues: the European Broadcasting Union (EBU).

Some 80% of the cloud technology used by broadcasters falls under the headings of private off-premise, private on-premise and hybrid, but within four years public cloud and private off-premise cloud services are predicted to dominate.

Jean-Pierre Evain runs the EBU project Metadata Information Management and Artificial Intelligence (its many supporting broadcasters include RAI, VRT and NHK) and has set his sights on the future potential of AI in cloud applications.

“We are evaluating AI and its uses in terms of business needs and operational pertinence,” he says.

The focus is on live speech-to-text and natural language processing (NLP), real-time translation, text/data-to-speech (for sport and accessibility), automatic logging in sport, automated summarisation, and face recognition and identification.

“We are also looking at AI tools to address fake news,” says Evain. “The list of tools could grow very long. Of course, we are also looking at integrating these tools into the workflow as microservices. We have renamed FIMS as MCMA (Media Cloud and Micro-service Architecture), with a primary focus on AI using a hybrid workflow of onsite, AWS and Azure services.”

Broadcasters have been open to AI for years, and the EBU has worked on automated metadata extraction (AME) for the same period. What is the big difference now?

“People are suddenly getting excited about AI, and when you ask them what they are doing they answer, ‘Oh, I am doing AME’,” says Evain. “Why didn’t they do it with us since we’ve been working on it?

“People ask a lot of questions about what the majors do, and what the big companies like Google do with their content. They have the feeling that [the majors] are using their content to train their tools,” he adds.

With these giants facing little competition, broadcasters who are unhappy with them may still have to pay more and more to access their tools as time passes.

“I get many questions like this,” continues Evain. “You can also have on-site solutions from different vendors, and then there are the people who can develop their own tools, like NHK and VRT. They develop tools but want to combine them with all the possibilities around them, whether from vendors or from cloud services.

“This is why we suggest that, for better integration into your workflow, you need to look at common interfaces, or at least best practices,” he adds. “That is where our activities around FIMS and MCMA come into play.”
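To make the idea of a common interface concrete, here is a minimal sketch, assuming a single hypothetical speech-to-text contract that both an in-house engine and a cloud service adapter implement. The names `SpeechToText`, `OnSiteEngine`, `CloudEngine` and `pick_backend` are invented for illustration and are not part of FIMS or MCMA; the point is only that a workflow coded against one interface can route jobs on site or to the cloud without changing.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Transcript:
    text: str
    backend: str  # which engine produced the transcript


class SpeechToText(ABC):
    """Hypothetical common interface that every speech-to-text backend implements."""

    name: str

    @abstractmethod
    def transcribe(self, media_uri: str) -> Transcript: ...


class OnSiteEngine(SpeechToText):
    name = "on-site"

    def transcribe(self, media_uri: str) -> Transcript:
        # Placeholder: an in-house engine would process the media here.
        return Transcript(text=f"[transcript of {media_uri}]", backend=self.name)


class CloudEngine(SpeechToText):
    name = "cloud"

    def transcribe(self, media_uri: str) -> Transcript:
        # Placeholder: an adapter would call a cloud provider's API here.
        return Transcript(text=f"[transcript of {media_uri}]", backend=self.name)


def pick_backend(media_uri: str, backends: dict[str, SpeechToText]) -> SpeechToText:
    # Hypothetical routing policy: media already held on site stays on site,
    # everything else goes to the cloud.
    return backends["on-site"] if media_uri.startswith("file://") else backends["cloud"]
```

For example, with `backends = {"on-site": OnSiteEngine(), "cloud": CloudEngine()}`, a local archive file such as `file:///archive/news.mxf` is routed to the on-site engine, while an `s3://` URI goes to the cloud adapter. Swapping either backend only requires another class that honours the same interface.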

Evain continues: “We realise that the level of education and expertise is very low. Some people are thinking, ‘I want to do some cloud, but I don’t want to do services’, but the cloud is based on services.

“We think it is vital that people get interested,” he adds. “People seem to understand AI, but then seem completely confused by what microservices and services represent. [However] there is such excitement about AI, and we are trying to surf that wave.”


Machine language

Options for implementing AI include machine learning and deep learning with neural networks; the choice affects how a tool is trained to give better results for a specific application.

“Broadcasters will go for it,” says Evain. “They are in the investigative phase, but they do have operational concerns around onsite versus cloud AI tools. This is why, through MCMA, hybrid workflows will be the most likely common integration in the future.”

When it comes to AI travelling up the chain for automation, it is all about data. “Eventually you have to initiate processes to execute. You can define/make recommendations, or AI can be used somewhere else up the chain to extract metadata, but what is travelling up the chain is data,” says Evain. “In the case of recommendation and personalisation, AI has the final say, as it is actually processing a lot of data.”

Evain’s group is working on AI-based solutions for automatically producing highlights and summaries in sport.

“We want to keep timed metadata associated with content,” he says. “We have been working on the European Championship, where we tried to automatically generate daily highlights. We are looking at the automatic logging of content and are already between a partial and full solution.”

EBU members using one of these solutions get open-source software and advice on introducing it into their workflow.

“People want assistance and speed without compromising content quality or editorial integrity,” he says. “Broadcasters should not overrate expectations, and not leave it all in the hands of AI tools. For example, you cannot get rid of all the loggers because there could be exceptional events and you need somebody able to spot them.”

Shakes down the backbone

Willem Vermost manages an EBU project titled Cloud Technologies for Media Production Around IP and Remote Content Creation. He began by addressing the idea that the large number of lift-and-shift moves is due to people wanting to transition to IP first.

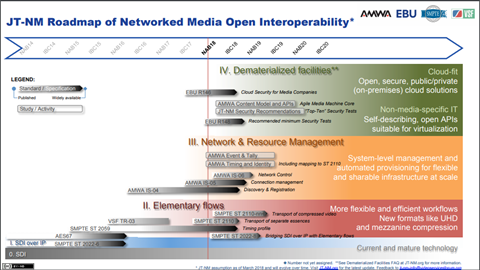

“They don’t think about cloud architecture,” he says. “That is a little bit too fast, so IP is probably first. The JTNM [Joint Task Force on Networked Media] roadmap says to use cloud technology and be as flexible as possible, but we are not there yet. We are speaking about true production and not about playout, which already goes well with cloud.”

As for when a full cloud migration will happen, Vermost is not sure about the timing but sees plenty of serious trials. He points to network interface card (NIC) vendors whose cards make it possible to pace traffic in hardware.

“If you move from a box to software the issue is that the further you are away from the physical wire, the harder it gets to cope with the certain traffic shapes that are being dictated by SMPTE 2110-21,” he says. “Now, there are network cards that enable this, so the software does not have to care about it.”
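“Traffic shape” here means how evenly a sender spreads its packets over time. The sketch below assumes the simplest case, linear pacing, where each frame's packets are spaced uniformly across the frame period. It is an illustration only: SMPTE ST 2110-21 defines the actual sender types and timing parameters, which this code does not implement in full. The figure of 4,320 packets per frame used below is a hypothetical value for 1080p 4:2:2 10-bit video (4,800 bytes per line split into four 1,200-byte payloads, times 1,080 lines).

```python
from fractions import Fraction


def linear_send_times(frame_rate: Fraction, packets_per_frame: int,
                      frames: int = 1) -> list[Fraction]:
    """Return send offsets in seconds for evenly paced packets.

    Simplified linear pacing: packets in each frame are spread uniformly
    over the frame period, so the gap between packets is
    frame_period / packets_per_frame. Exact fractions avoid rounding drift.
    """
    frame_period = 1 / frame_rate
    spacing = frame_period / packets_per_frame
    return [
        f * frame_period + p * spacing
        for f in range(frames)
        for p in range(packets_per_frame)
    ]
```

At 50 frames per second with 4,320 packets per frame, the gap works out to 1/216,000 s, roughly 4.6 microseconds between packets. Holding that spacing steady in a general-purpose software stack is hard, which is why pacing in the NIC relieves the software of the job.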

This should fast-track many software implementations, with far more of them simply running on an off-the-shelf server with an off-the-shelf network card.

“Fox did a few tests with VMware and there are still issues around getting that tweaked to cope with traffic shape,” says Vermost. “There are still some struggles in the backbone, but within the year we will be moving fast.”

Proper standard API

Vermost has firm views on SMPTE ST 2110, which is now published and vital.

“It is not about discovery and registration and all those sorts of things,” he says. “It is what the AMWA open specifications bring you, and then there are open APIs. Without those this is the perfect layer for vendor lock-in, so I am happy to see the big take-up of IS-04 and IS-05; and probably soon we will see IS-06.

“But if you think about the next layer and talk about virtualisation, maybe some of them want to use VMware and others want to use other brands of virtualisation software, and there you have the same issue,” he adds. “You need a proper standard API to put up your virtual box and talk to it.”
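As an example of what the open APIs Vermost mentions look like in practice, here is a minimal sketch of an AMWA IS-05 connection request that tells a receiver to subscribe to a sender. It only builds the HTTP request and does not send it. The device address, receiver and sender IDs and SDP text are hypothetical placeholders, and the `v1.0` path segment is an assumption; a real controller should use whichever version the device advertises.

```python
import json
import urllib.request


def build_is05_patch(base_url: str, receiver_id: str, sender_id: str,
                     sdp: str) -> urllib.request.Request:
    """Build (but do not send) an IS-05 PATCH that stages and immediately
    activates a receiver connection to the given sender."""
    # Assumed API version; check the versions the device actually exposes.
    url = f"{base_url}/x-nmos/connection/v1.0/single/receivers/{receiver_id}/staged"
    body = {
        "sender_id": sender_id,
        "master_enable": True,
        "activation": {"mode": "activate_immediate"},
        # The SDP file describes the ST 2110 stream the receiver should join.
        "transport_file": {"data": sdp, "type": "application/sdp"},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PATCH",
    )
```

A controller would pass the result to `urllib.request.urlopen` to send it. Because any IS-05-compliant receiver accepts the same request, the controller does not need vendor-specific code, which is the lock-in argument Vermost makes.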

Work will be done on the section of the JTNM roadmap covering a common API for virtualisation. On production or workflow metadata, Vermost recalls his time working at VRT and a new initiative there to collect metadata from all the processes.

“They are taking all the metadata of all services, and they use a bit of AI to do the prediction,” he says. “They can now visualise the whole production process and predict when a video piece will be ready for playout or to be posted on the Web.”

Vermost's group has two strategic programmes running: the production infrastructure group and the New Buildings Initiative (BI) group.

“That BI group is looking at all the new buildings being initiated throughout Europe; there are at least 59 big projects on my list where people are building a completely new facility,” he says. “They are looking at what technology they should use, and most are looking towards IP. There are a few proofs of concept being conducted as well.”

Vermost and his group are monitoring three projects right now.

“Within these programmes we followed SMPTE 2110 to understand how it works, so our members will be well served by moving to the new standard,” he explains. “We built a piece of software to check whether 2110 streams are fully compliant.”
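One thing a compliance checker can examine is whether packets arrive in bursts. The sketch below is a simplified leaky-bucket check in the spirit of the network compatibility model in ST 2110-21. It is not the EBU's software, and it does not reproduce the standard's exact rules. Packets fill a bucket that drains at one packet per `trs`. If the bucket ever holds more than `cmax` packets, the stream is considered too bursty. Both parameter values in the example are hypothetical.

```python
def peak_bucket_level(arrivals: list[float], trs: float) -> float:
    """Peak fill of a bucket that gains one packet per arrival and drains
    continuously at one packet every `trs` (same time unit as arrivals).
    Arrival times are assumed to be sorted in ascending order."""
    level = 0.0
    peak = 0.0
    prev = None
    for t in arrivals:
        if prev is not None:
            # Drain for the time elapsed since the previous packet, never below empty.
            level = max(0.0, level - (t - prev) / trs)
        level += 1.0  # the packet that just arrived
        peak = max(peak, level)
        prev = t
    return peak


def is_compliant(arrivals: list[float], trs: float, cmax: float) -> bool:
    """Simplified pass/fail: the bucket must never exceed cmax packets."""
    return peak_bucket_level(arrivals, trs) <= cmax
```

With times in microseconds and `trs = 1`, evenly spaced arrivals `[0, 1, 2, 3]` never push the bucket above one packet, so they pass a hypothetical `cmax` of 2. Four packets sent back-to-back at time 0 peak at four packets and fail.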


AI rescues an archive

Vermost is looking closely at the things you can do with live uncompressed video.

“The connection cost is high, so you would like to compress and see a codec kick in here,” he says. “There is no single standard codec, and if you look at the SMPTE process, they are not keen to promote any one codec in the draft 2110-22 for compressed video.”

Looking at EBU members, Vermost had a surprise. “I was amazed by the results,” he says. “One example was RTS in Geneva, which used AI to resolve an issue with archiving. The metadata was not correct, and they calculated they would spend 20 years to correct it. They deployed two people full-time for a year to come up with an algorithm, and they will now be finished with the metadata problem next year. Saving 18 years is a spectacular project, and one fine example of the uses for AI.”

The prediction that hybrid and private on-premise data centres will vanish by 2022 is not a certainty.

“I think the first move EBU members make will be to centralise with a data centre,” says Vermost. “They will start to virtualise as much as possible inside the building, and then start to move out.”
