What are the ‘good’ AI solutions available in the media industry today and how can they be integrated responsibly? These are just some of the questions contemplated by Rowan de Pomerai, CTO at DPP, following the organisation’s latest report on the AI landscape.

Generative AI’s biggest impact on the media industry last year was not a script written by ChatGPT, or a video created by RunwayML, or even a deepfake video. It was that it reset expectations of what AI means for professional media.

That reset manifests in three ways: reconsidering what’s possible with AI, recalibrating how far along the journey we are, and refocusing on the risks that come with these exciting new capabilities.

In the process of researching the DPP’s new report, AI in Media: What does good look like?, I found experts across the industry to be laser-focused on these same things: how to identify effective AI solutions available today, how to anticipate those we’ll see in the future, and how to adopt AI responsibly.

When considering the impact of generative AI, it is perhaps worth reflecting on the fact that attempts to automate creativity have been around for a very long time. Musician and composer Raymond Scott began work in the 1950s on what he would later call the Electronium, a device which applied a combination of rules and randomness to generate new music. Ultimately it didn’t take off, but it was successful enough at the time for Motown Records to purchase one to use as an ‘idea generator’ for composers.

But is this time different? Will generative AI revolutionise the way we create and tell stories?

Changing narrative

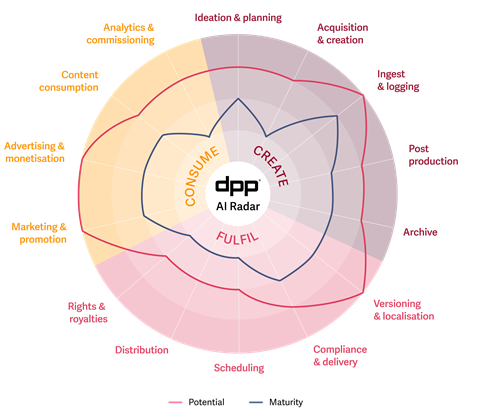

When it comes to our understanding of the impact of AI on creativity, the analysis we completed at the end of 2023 contrasted starkly with similar DPP analysis from the end of 2018. The 55 experts who contributed to the recent research estimated greater potential for AI in areas such as versioning and localisation, marketing, and advertising, when compared to 2018, indicating that the rise of generative AI has opened up our collective imagination as to what AI can do in these creative spaces.

But even more notable is that the maturity scores - that is, the assessments of how close current industry solutions are to realising the potential - have actually dropped for areas such as content acquisition and creation, and advertising. This isn’t because the industry has gone backwards, but rather that the finish line now seems so much further in the distance.

I couldn’t help but wonder: is this sudden excitement for AI’s potential in creative areas well-founded? Or is it just that the leaders we interviewed - mostly from operations and technology roles - overestimate the impact on their creative colleagues?

Perhaps there’s a grain of truth in both hypotheses. What we can say is that in the near future, the use of AI in professional media will likely be most effectively deployed in manipulating media rather than creating it from scratch. It’s telling that one of the highest areas of potential was marketing and promotion - an area which re-cuts programme content into trailers and adjusts them for different audiences and devices. Another was advertising - where targeting could be dramatically enhanced if AI can personalise and contextualise adverts at scale. And AI-assisted localisation has been a hot topic for a couple of years now - using AI to transcribe audio, translate text, and transform voices.

That’s not to say that AI won’t be used to generate content, of course. Anyone who creates stock footage might have felt nervous at the recent release of OpenAI’s Sora, for example. It seems certain that AI-generated shots will make their way into adverts quickly, and perhaps into TV shows soon enough. And without doubt, AI will aid creatives in content creation - whether speeding up colour adjustment, filling areas of an image with AI-generated content, or creating whole 3D worlds for virtual production.

And all the while, of course, a quiet revolution has already been taking place: the more prosaic forms of non-generative AI have marched towards maturity. The most prominent example is AI video tagging and metadata generation. When this capability first emerged almost a decade ago, it seemed that every vendor wanted to integrate it and extol its virtues. But customers found it underwhelming at best, and bordering on snake oil at worst. Today, however, media companies are finding such capabilities to be more accurate, better implemented, and often rather useful.

There is no longer a question about whether AI will dramatically impact professional media. It will. In many ways, the more interesting questions are around how media companies will manage this revolution.

Fear about automation in the creative arts is no more a new concept than attempts to automate creativity. Kurt Vonnegut’s first novel depicted a dystopian future of automation, and was titled Player Piano after a popular early twentieth-century musical instrument which could ‘play itself’. Similar fears exist today about the impact of AI on jobs. But perhaps the biggest concerns for media companies are around intellectual property (IP), and audience trust.

Content provenance

Generative AI requires a lot of training data, and while many media industry vendors are carefully training their models on copyright-cleared material, major large language models (LLMs) have often used data scraped from the internet. This raises questions of IP ownership and the value derived, which will be played out in the courts - and eventually in legislation. The fight is already on: OpenAI signed content licensing deals with news agency AP in July 2023, and then in December with publishing giant Axel Springer. Yet only two weeks later the New York Times launched a high-profile lawsuit against OpenAI and its partner Microsoft over the copyright of training data. The outcomes of all this will be keenly watched by the owners of the world’s video content catalogues.

Meanwhile, many fear that audience trust will be eroded. The big news recently was Google joining the Coalition for Content Provenance and Authenticity (C2PA), an alliance aiming to set standards for proving genuine content, to aid in the fight against AI fakes. But there is a debate to be had about just how - and how often - audiences ought to be informed about legitimate uses of AI in TV content.

Media companies are dealing with the same issues as any enterprise, notably the fact that their staff are using AI whether sanctioned or not. Just as IT departments have had to learn to deal with Shadow IT (the fact that business teams acquire their own unsanctioned software), so we are now seeing the emergence of Shadow AI. Many are rushing to write policies to manage this fast-moving space.

With so much going on, no operations or technology executive in a media company can afford to ignore the impacts of AI. The good news is that most certainly aren’t. They’re taking it very seriously, considering all the challenges, risks, benefits and opportunities it can offer. That’s why it’s so important to share perspectives and best practices. At the DPP, we’re privileged to help them to do so.

AI in Media: What does good look like? is now available to download for free to DPP members.
