Last week, Deepa Subramaniam, Adobe’s Vice President of Product Marketing for Creative Cloud, was excited to showcase Adobe’s Firefly generative AI model at Adobe MAX London. Michael Burns asks her about the hopes and fears surrounding its impact on post production.

“Firefly debuted last March. It’s barely over a year old, yet it’s been incredible to see the reception to the foundational models – we have an image model, we have a vector model, we have a design model, and we’re working on a video model, which we’ll bring to market this year,” Subramaniam told IBC365 on the eve of the MAX event in Battersea. Subramaniam heads up a team driving Adobe’s digital imaging, photography, video, and design strategies forward: “The excitement and enthusiasm and adoption from the community [towards Firefly] have been awesome, and all of that development has happened through open public betas.”

The Firefly model integrates AI image generation into Adobe’s creative applications, shown at MAX running in Adobe Photoshop, Lightroom, Illustrator, InDesign, and Adobe Express. But what will be of most interest to IBC365 readers is a sneak preview of a new video model that promises AI-powered post production capabilities in Premiere Pro.

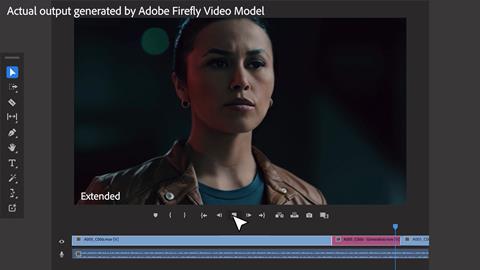

In a slick video presentation, Adobe showcased a Generative Extend feature that adds frames to extend clips, enabling improved editing, longer shots, and smoother transitions. AI tracking and masking functionality will allow objects to be added or removed within the footage. Adobe says this will enable editors to quickly remove unwanted items, change an actor’s wardrobe, or add set dressings such as a painting or photorealistic flowers on a desk. It might also give VFX teams some cause for concern.


AI text-to-video will be able to generate entirely new footage directly within Premiere Pro from a prompt or reference images. Adobe suggests such clips can be used to ideate and create storyboards, or to create B-roll for augmenting live-action footage.

The future for third parties

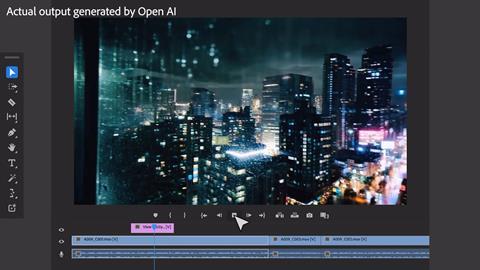

Adobe isn’t alone in exploring AI video. Indeed, the preview showed third-party video-generation models from OpenAI and Runway integrated to generate B-roll, while a generative model from Pika Labs was demonstrated working with the Generative Extend tool to add a few seconds to the end of a shot.

So does this mean that Firefly will handle some aspects of content generation while leaving others, particularly the heavy lifting required for B-roll, to third-party players?

“Sometimes certain models are a little bit better for ideation,” says Subramaniam. “You would never want to use any of that output in production; [it’s] just to get the creative juices flowing and thinking about things. And so that’s the idea of extending the creative process by bringing in more models.

“[Firefly models] are great and they’re commercially safe and the community loves that, but we think that there’s a better world for creativity if we bring more models in.

“Exactly how the third-party mechanism gets enabled from the customer endpoint is still being figured out,” she adds. “At this point, we’re excited about the state of technology and exploring this as a research foray with those partners, and then taking it from there.”

Safety first

The ‘commercially safe’ tag crops up a lot in our conversation. It’s not surprising; there’s been a lot of heated debate around which content commercial AI models have been trained on, how results can be biased and skewed by the input data, and whether the generated content is being passed off as original human work.

“We want to have a dialogue about those fears. Understand them. Share our approach,” says Subramaniam. “We have been so open, whether it’s the actual technology being open through public betas and not releasing it until we feel it’s ready. Even just sharing that we’re working on a video model was to start eliciting that feedback: What do you want to see? What are you concerned about?”

“The bulk of people I talk to want to have commercially safe models. And what we provide, from how we set up and train our model, to deploying our model, we fully control that and can vouch for that. We offer indemnification. It’s a core part of our approach with Firefly.”

“It’s not lip service,” she continues. “From the beginning, it’s in how we are building this model, how we are bringing these workflows in.”

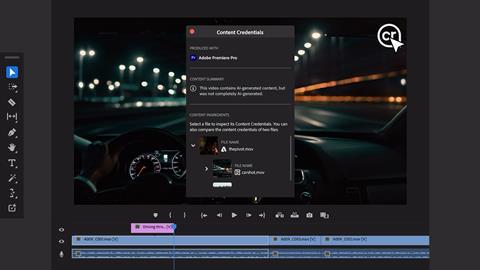

Part of this approach is Content Credentials, tamper-evident metadata that can be applied to assets at export or download. They increase transparency around the origins and history of the assets they are applied to. They’re made available through the Content Authenticity Initiative (CAI) and Subramaniam says they will be brought into Premiere Pro this year.

“I’m so excited by the adoption of Content Credentials and the coalition around the Content Authenticity Initiative,” she says. “We have all the right vectors to move AI innovation forward in a responsible way and encourage others to follow suit.”

UX and transferable skills

Subramaniam spoke at the Television Academy a few months ago about AI and its role in creating TV, media and entertainment. “That’s a very important topic, especially on the back of the Guild strikes,” she says now. “You can see these industries starting to understand both the power of AI and generative AI and the implications of that. What I found heartening was many people I’ve been talking to are not just coming up with those concerns and backing away, but are saying, ‘Tell me more. And how can you help? And what do I need to know? And help me understand this so I can use this technology but use it in a more informed way.’”

This ties into what she reveals about the user experience (UX) currently being planned by the product team for the video model.

“We need to make the person using Premiere understand which model they’re using, and the implications of those models,” she explains. “You could see us going down a path where we might guide the user: ‘what are you trying to do? Are you trying to ideate more? Okay, then here’s the right set of models for that’. Or if you’re creating for production, then let’s make sure you’re aware of the models that you have a choice from and you understand that you’re about to create something that you’ll actually [deploy]. That’s the sort of UX elements that we’re thinking through.”

As Subramaniam points out, a lot of video editors also use Photoshop, and the implication is that the UX for AI in Premiere will be similar to that of the other Creative Cloud tools.

“We are very cognizant of the fact that there’s a lot of movement of our customers across those applications,” she says. “Photoshop is very powerful and critical for video workflows, whether it’s the actual video workflows or just the still imagery you create to pitch your video. Our design team and our AI team work together so that with these concepts, whether it’s on Firefly.com or in the Pro apps or Adobe Express, there’s some continuity. So you’re not relearning the wheel.”

Wild, wild west

Subramaniam stressed that all the generated content in the preview video was real output, not only from the Firefly video model but also from the third-party models. If you’ve had any experience generating AI image content from scratch, particularly with early AI models, you might have noticed that the results are frequently not that great. When I suggested it could be similar for video, Subramaniam acknowledged that “the video models are definitely the new frontier”.

“Image models have really matured; now everyone’s thinking about more difficult mediums like video and audio,” she adds. “We’re part of that cutting-edge research. We’ve been sneaking some stuff out around auto dubbing and being able to use our voice model to translate very short clips into different languages. These are very simple, very straightforward use cases. Content being created [for production] has to be globalised at a pace and a scale that can be tough. So we’re always pushing the boundaries of research, but thinking clearly about how to bring it into the applications.”

While it’s still early days, and she admits that “there’s still a long time for text-to-video to get better”, Subramaniam says the reception to the preview has been “incredibly positive”.

“We chose the workflows that pro video editors use,” she said. “Take Generative Extend, where you can extend a video clip a few frames. [In production], if you miss the shot, you miss the shot, and it takes a lot of hours and money to [fix in post]. So people have been saying: ‘Christmas is here. Please bring us that’.

“Add and Remove is also a huge hit, especially with folks who are not as familiar with rotobrushing within After Effects; the time-saving aspect of it is huge,” she adds.

A programme to span all disciplines?

The applications for quick replacements are obvious for commercials and marketing promos, but I wondered what the reaction was from editors working on TV dramas or films.

“There has been excitement that Adobe understands how pro video editors can lose hours of their day trying to add, or remove, or extend a clip, whether you’re creating content for social media, or whether you’re creating content for a longer piece like a commercial,” agrees Subramaniam. “I haven’t yet heard from people about how they might employ it day-to-day in broadcast – I think that’s to come – but that’s also why we’re sharing this: to get the community excited so they can participate in our beta. When we do launch it, we’ll have that feedback to help us improve and harden the innovation in partnership with them.”

After Effects is an obvious next port of call for Firefly.

“We’re absolutely thinking about that,” she confirms. “We love all of our video applications, not just Premiere alone. I talked a bit about audio innovation with generative AI and bringing that into other applications. Our video platform is robust. We’ll be innovating that with generative AI.”

“The pace of innovation and research is so fast,” Subramaniam adds. “We’re very excited about the level of quality we could achieve when bringing a commercially safe video model to market this year.”

Deepa Subramaniam has spent over ten years at Adobe, first as a Computer Scientist and later as a principal lead in the creation of Creative Cloud. She has also been Vice President of Product and Design at Kickstarter, co-founder of Wherewithall (where she advised tech startups), and Director of Product for the Hillary for America campaign.

