The VP of Unity Wētā Tools gets under the skin of 3D content creation, and emphasises the value of real time in conversation with Michael Burns.

Natalya Tatarchuk, Vice President of Unity Wētā Tools, isn’t looking to change the world of 3D content creation, but to make it more efficient and accessible. We interviewed her ahead of her keynote at IBC2023, on the eve of a substantial release of new and updated tools for the Unity platform. Tatarchuk is also Unity’s Distinguished Technical Fellow and Chief Architect, and a former AAA games developer, and she wants to “empower artists to be more effective”.

Ahead of her IBC2023 session, Bringing Wētā Tools To Real-Time, we found Tatarchuk happy to discuss two things. The first was how artists and studios can use real-time tools to be both more creative and more productive. The second was how AI can help replace not artists, but the boring tasks that artists hate.

Unity was already a leading platform for creating interactive content. In 2021 it was boosted by the $1.62bn purchase of Wētā Digital’s tools, pipeline, technology, and engineering talent, and by the $130m purchase of real-time simulation and deformation specialist Ziva Dynamics. More recently, the Unity Wētā Tools division for making film and real-time 3D animations and games launched at the Siggraph 2023 conference in early August. Tatarchuk’s IBC2023 session promises to show what fruit these acquisitions are bearing for artists and studios across media and entertainment.

Incredible 3D Tools

“We’ve been working for several years on what we believe are incredible tools to help people to deliver filmic quality content in real time,” said Tatarchuk. “We brought a lot of the expertise from Wētā Digital, and built on the strength of Unity, known for its accessibility, platform range, and content scalability, to enable more efficient and more ambitious projects to be achievable and accessible in real time.”

An example is the newly upgraded tool, Ziva VFX, used to build characters for Netflix’s The Sea Beast. It allows 3D character artists to rig and accurately simulate organic material: fat, muscle, skin, and other elements that make a CG creature appear more realistic to audiences. Simulating these deformations takes time, however.

“Artists will move a limb, they’ll make a change, but they’re not able to see the result [immediately],” said Tatarchuk. “They can wait for hours.”

Version 2.2 of Ziva VFX taps into the workstation’s GPU to compute complex deformation rigs, which lets artists get those results in seconds.

“We’re talking about character rigs for tentpole movies like The Avengers, or creatures in The Witcher, but you don’t have to fire off a big expensive job in the render farm, you’re not paying for expensive cloud, you’re doing it on a GPU, one that powers a normal DCC [digital content creation software] workstation, and you get results in real time.”

Once the rig is built, artists move on to crafting the character’s performance. Unity has this covered too: Ziva Real-Time (Ziva RT) uses AI to speed things up. Artists import a relatively small number of animated frames, and the tool uses them to train a neural network in about five minutes.

“These [creatures or characters] can have complex deformer nodes, so changing a pivot point usually takes anywhere from 10-15 minutes to half an hour to do, but all the artist sees is the raw skeleton movement,” explained Tatarchuk. “So, they’re not really working with the performance; they’re working with an abstraction of the performance.

“Of course, CG artists have been great at [working that way] but it’s not a satisfying workflow,” she added. “With Ziva RT, they can plug that deformer node into our trained-up AI network, and now they get the full simulation as they’re animating, crafting at the final performance quality.”

Animators who trialled Ziva Real-Time at studios such as DNEG have had “their minds blown,” she reported. “The animators work faster, but most importantly, they’re [working accurately] because they’re looking at the thing as it will be finally simulated. That is a completely different experience, which frankly is revolutionary for them.”

Faster Iteration, Faster Results

Tatarchuk said such real-time tools allow artists to stay longer in the creative flow.

“That magical space is enabled because they’re not exiting to get a coffee, or to check their email, or because the machine goes to sleep,” she stated. “They’re constantly in the flow of dreaming about their performance, then seeing and crafting it.

“This also matters to studios,” she added. “They get results faster, iterate faster, complete projects faster, and save on production costs.”

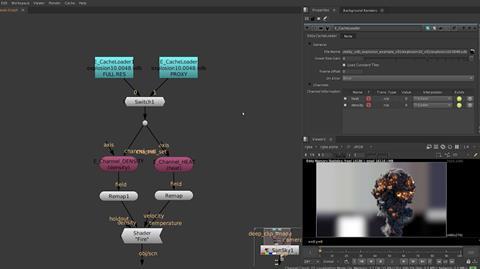

Several other newly available tools derive from Wētā production pipelines. Wig is a highly customisable hair and fur authoring tool for Maya with a USD-based plug-in. There are also two add-ons for Nuke. DeepComp is a flexible compositing plug-in that allows scenes to be edited without re-rendering the entire scene. Eddy 3.0 can create, render, and denoise volumetric effects like those found in Avatar: The Way of Water, with instant visual feedback.

There’s also a new version of procedural vegetation modeller SpeedTree with more accessible creative control, now able to work within the pipeline of any real-time engine, not just Unity.

“One of the philosophy principles that we adhere to very strongly is to not dictate the pipeline,” said Tatarchuk. “We’re not here to tell the artists what DCC they should work on, what workflows they should switch to. They have keyboard shortcuts that they’ve been accustomed to for many years, and we want to respect their muscle memory.”

Democratisation of Top-Tier Tools

It’s not just for top-flight artists. “As well as wanting to empower professional artists to help them be more efficient, Unity also believes that the world is better with more creators,” said Tatarchuk. “There are many people who are afraid to be artists because they feel they lack sufficient skills and knowledge and training. So what we’re also investing in is taking these amazing professional tools, and making them more accessible. People who may not have dreamt of themselves as creators, because they didn’t have the hundreds of hours of training due to cost or availability, can create art with the same fidelity and the same quality [as professionals], in a much easier and accessible way.”

This isn’t dumbing down, she stressed. “We want the people who use these accessible tools to be equally comfortable whether they are Wētā level experts, or not.”

One such tool is Ziva Face Trainer (ZFT), a cloud-based application. Tatarchuk said it lets you bring in a 3D mesh of a face and get back a fully rigged puppet in under an hour, with no rigging skills required. The latest version can also use data captured from live performances, or even just from a phone, and returns the rigged model within a day for use in any real-time project.

“It usually takes four to six months for somebody with deep expertise in complex facial rigging to do that,” she said. “They’re rare skills. Now, if you’re an expert, you get a fast-starting point, easy and inexpensive, to bring it into a DCC [where] you can iterate further and make it your own. If you’re not an expert, you can plug it into Unity and just get going with it. That gets people into the whole workflow.

“I’m seeing a huge momentum and a desire to communicate and share experiences through 3D interactively, so we’re focused on the ability to create interactive 3D experiences quickly without the technological barrier,” Tatarchuk added. “Professional artists are extraordinarily technical human beings. The majority of the world isn’t. We want to bring that power of creativity that those creators enjoy but make it accessible so anybody can create something quickly.”

AI: Changing the Narrative

The next real-time innovation concerns emergent behaviour, based on Unity’s Sentis, a solution under development for embedding AI models into a real-time 3D engine. “We’re going to be able to talk to a character and engage with it, where all of the animation is completely ‘uncanned’,” said Tatarchuk. “We’re going to generate it dynamically based on [the actor/user/gamer] talking to that character and then creating the performances and emotions dynamically.

“It changes how you think about [the] story,” she added. “A story is going to be unique to me. In a game, my experience is going to be crafted by me when I engage with a character. If I’m a creator for film, I’m going to be able to iterate on the story so much faster. It’s the true art of storyboarding at your fingertips.

“We honestly believe there are a lot of people who have amazing ideas – we want to give them all the power and the tools to bring these ideas to life and to help them reach the wider audience. People want to have quick access to these creation tools, they want to pick them up, play, and see what they can do.”

Join Natalya Tatarchuk at IBC2023 as she discusses groundbreaking work in character, environment, and rendering pipelines on 16 Sep 2023, 11:00 - 11:30 in the Forum.
