It’s almost impossible to cross the IBC2023 halls this year without overhearing conversations about AI and where it might be leading the broadcasting ecosystem. So it’s no surprise that the Content Everywhere panel discussion, ‘The opportunities and limitations for AI’, was packed with attendees keen to hear what conundrums AI will bring.

Moderated by Colin Dixon, Founder and Chief Analyst, nScreenMedia, the panel invited Phani Kolaraja, CEO, CuVo; Radu Orghidan, Principal Data Scientist, Endava; and Stuart Huke, Head of Product 24iQ, 24i, to share their take on the pros and cons of a data-driven future for broadcasting.

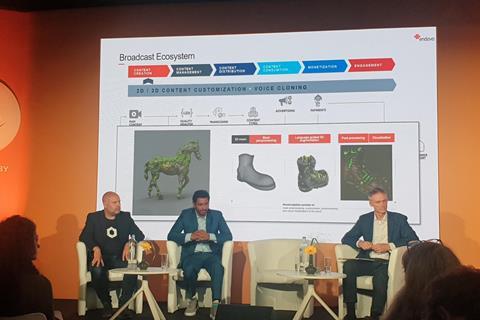

Three presentations opened the discussion. The first came from Orghidan, who outlined the two types of AI (conventional and generative) before laying out the different stages of the broadcast ecosystem and how AI can benefit each of them, from content creation to engagement.

He talked of the opportunities AI can create for interactive experiences, from building materials and “choose your own adventure” formats to integrating games, live interactivity, VR and AR, and social interaction.

Bumps in the road

Next, Orghidan set out the strengths of current AI development, such as highly personalised content and targeted advertising, as well as its threats and weaknesses, such as privacy concerns and technology limitations. When Dixon asked how we can avoid the content bubble and the dreaded echo chamber, Orghidan reminded us that as users we should employ our critical thinking and not accept everything AI feeds us: “From a tech point of view, the creators have to observe biases and the models should be transparent enough to enable understanding that there might be some going into some narrow corners from which the bubble grows.”

Next up was Kolaraja, who emphasised the importance of using AI ethically, arguing that developments need to be built on a foundation of privacy and trust: “asking users to trust and then maintaining their privacy.”

He went on to cover using data at large scale to drive hyper-personalisation, whilst still “ensuring that we are maintaining the trust of all the consumers…that’s where the responsibilities come in.”

Huke then focussed his presentation on the power of personalisation, which “can’t be ignored… Metadata is still a big issue in the industry in terms of getting consistent metadata. And obviously generative AI wouldn’t have a big impact in terms of enriching, creating new metadata, and getting the data in a state where algorithms can do their magic.”

More Time for Creativity

Each panellist covered slightly different areas where AI is impacting the industry. Other areas discussed included user interfaces that let people play with and learn the technology themselves.

Another opportune area brought up by Kolaraja was hyper-personalisation without compromising on privacy and trust. Huke reflected that the pace of AI development could allow the data community to spend less time on R&D, “freeing up a lot more time for developers to be creative… I think that in itself is a big positive in terms of industry… as a whole, in terms of the pace of development over time.”

On the topic of generating data and enhancing metadata, Orghidan talked of the benefits of understanding customers who are, for example, viewing a certain live stream: “So these technologies that are able to enhance our capabilities and make the analytics easier, I think are really interesting.”

Kolaraja brought up Responsive Narrative Factory, the IBC Accelerator project in which CuVo is a participant: “Tagging metadata and using it for predictive analysis or predictive viewing. Let’s say if you as a viewer, do not want to watch anything, you know, that would be disturbing for you, you can go into the actual video and use those predictive tagging to say filter out elements that you will not want to watch.” The aim is to make the same content suitable for a range of audiences.

Trust is a fine line

However, this idea raised another challenge: trust in AI. For news organisations, for example, how do we filter content that may be disturbing?

“You know, masking things live is going to be difficult right? The majority of what we can do… so far, the technology has only evolved to be able to use historical elements to be able to predict what happens right?” Kolaraja contemplated. “But if there is a live event that is happening, the challenge is: how do we take decisions live based on what has happened in the past? For that to happen (in) real time, we need to be able to trust in the folks that are actually preparing the feed… but it is a difficult one to solve. It’s something I don’t have a solution for, but maybe that’s what we should be looking at. That’s the opportunity.”

Orghidan added: “Two things: that this technology is really interesting, (it) is one of the only technologies that are able to take decisions and to create content. So these two abilities can be or have to be mitigated because if you let the AI take decisions on what to show or not, then you can arrive into some kind of bias that’s embedded into the AI on one hand, and if you let the AI create things, also unsupervised, that’s also dangerous. So, I mean, these two things have to be taken into account and mitigating (is) always having a human in the loop. That would be my advice.”

Dangerous Horizons?

Clearly, no platform is going to let AI run wild on its system; upsetting users is not the goal. Human oversight of AI-generated content remains key to keeping that content safe and of high quality.

As Huke noted: “That’s one of the big ones in terms of, I think users – they’ve got to trust the outcome. And I think that’s where there’s still some question marks over scalability and some of this technology in terms of its application. I think that’s where a lot of solutions get to be sort of finalised… the concept of it in its inception. Inception is a good one. But actually, is it scalable? Is it something that can be translated into a usable format and something again, that the users are going to accept and trust and that goes back down?”

Forward Thinking

Refusing to yield to a “dystopian view on technology,” Orghidan summarised his concerns with AI: acceptance, or rather the risk of accepting too much and abandoning our own critical thinking; drift, where ML models that used to work well inevitably become outdated and “the output stops reflecting the reality”; and manipulation, where anthropomorphising the models can leave humans open to being manipulated.

Other limitations suggested by Huke included latency: bots take time to calculate answers in a world where users are used to instant results. Kolaraja then considered that “processing and computing is still pretty pricey - I’ve seen it in use cases. Technology still needs to evolve in this space where it becomes affordable to be able to process to, the closest to real time right at scale,” adding that cheaper, more accessible technology could be used in education: “In spaces like this where we were able to make this be available for masses at a cheaper rate that schools can afford …hopefully we’ll get there.”

Dixon concluded with the takeaway: “We’ve only just begun in seeing how AI is impacting us.”
